I am a PhD Student at the School of Computer Science and Technology, Huazhong University of Science and Technology, in the HUST Media Lab, advised by Prof. Wei Yang. My research focuses on the intersection of Computer Vision, Graphics, and Neural Rendering.

I am currently a visiting student at Inception3D Lab, Westlake University, under the supervision of Prof. Anpei Chen.

Services

- Conference Reviewer: CVPR, ECCV, ICCV, NeurIPS, ICLR, ICML, ICME, AISTATS, ICASSP.

- Journal Reviewer: TPAMI.

Publications

* denotes equal contribution. † denotes corresponding author.

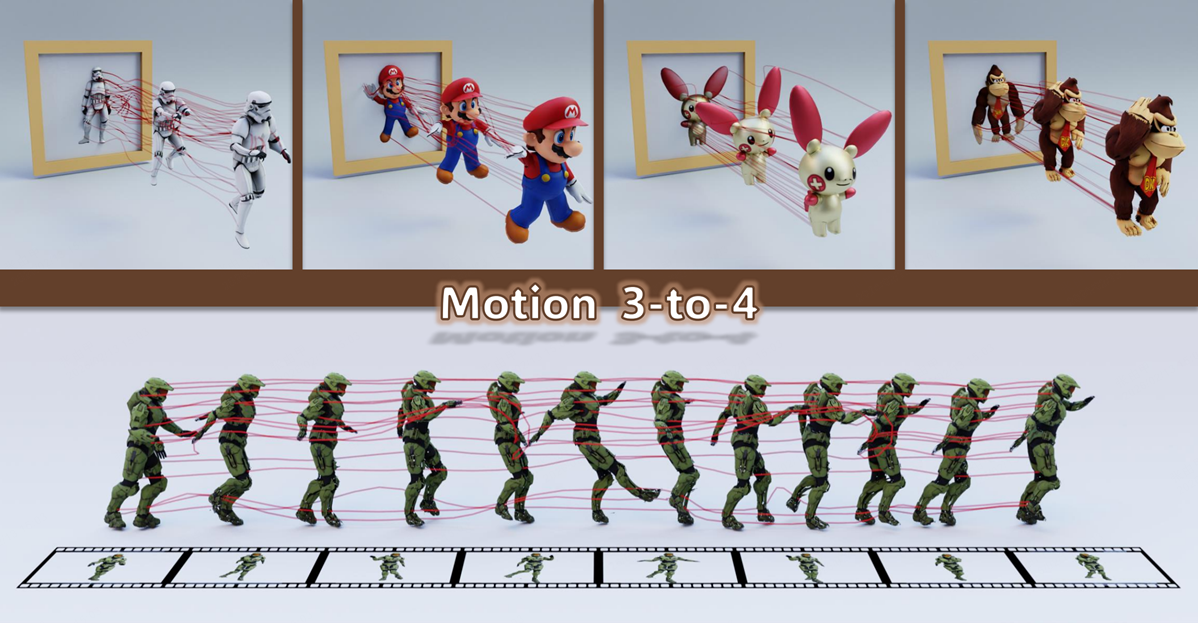

Motion 3-to-4: 3D Motion Reconstruction for 4D Synthesis

Hongyuan Chen, Xingyu Chen, Youjia Zhang, Zexiang Xu, Anpei Chen †

Computer Vision and Pattern Recognition (CVPR), 2026

- Synthesising 4D dynamic objects from a single monocular video.

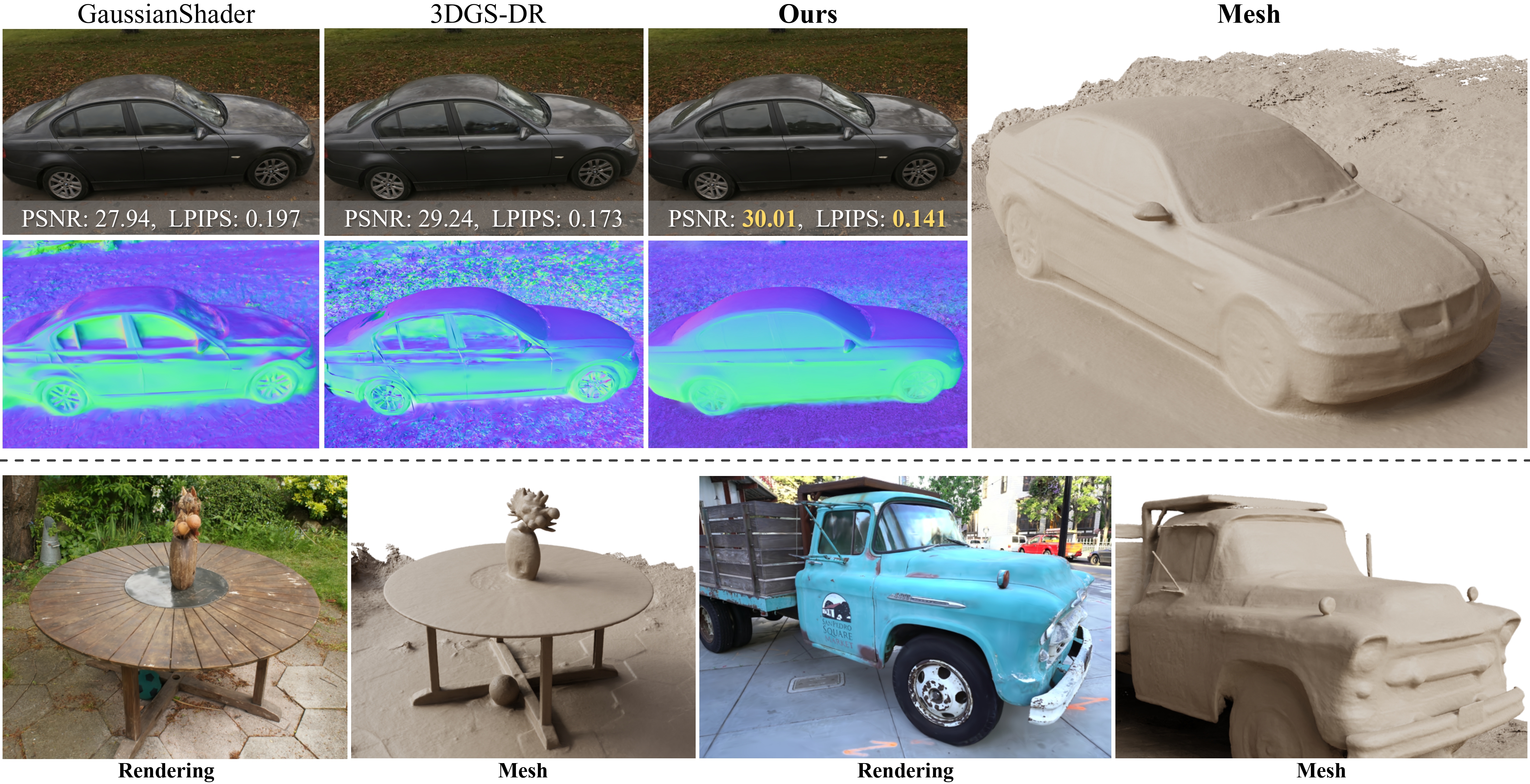

Ref-GS: Directional Factorization for 2D Gaussian Splatting

Youjia Zhang, Anpei Chen †, Yumin Wan, Zikai Song, Junqing Yu, Yawei Luo, Wei Yang †

Computer Vision and Pattern Recognition (CVPR), 2025

Project | Code | Slides | Poster

- Ref-GS builds upon the deferred rendering of Gaussian splatting and applies directional encoding to the deferred-rendered surface, effectively reducing the ambiguity between orientation and viewing angle. We introduce a spherical Mip-grid to capture varying levels of surface roughness, enabling roughness-aware Gaussian shading.

Optimized View and Geometry Distillation from Multi-view Diffuser

Youjia Zhang, Zikai Song, Junqing Yu, Yawei Luo, Wei Yang †

International Joint Conference on Artificial Intelligence (IJCAI), 2025

- We propose Unbiased Score Distillation (USD), which injects unconditioned 2D diffusion noise to debias optimization. Combined with a radiance-field consistency prior, it achieves consistent single-to-multi-view synthesis and geometry recovery. A subsequent two-step, object-aware denoising process yields high-quality views for accurate geometry and texture.

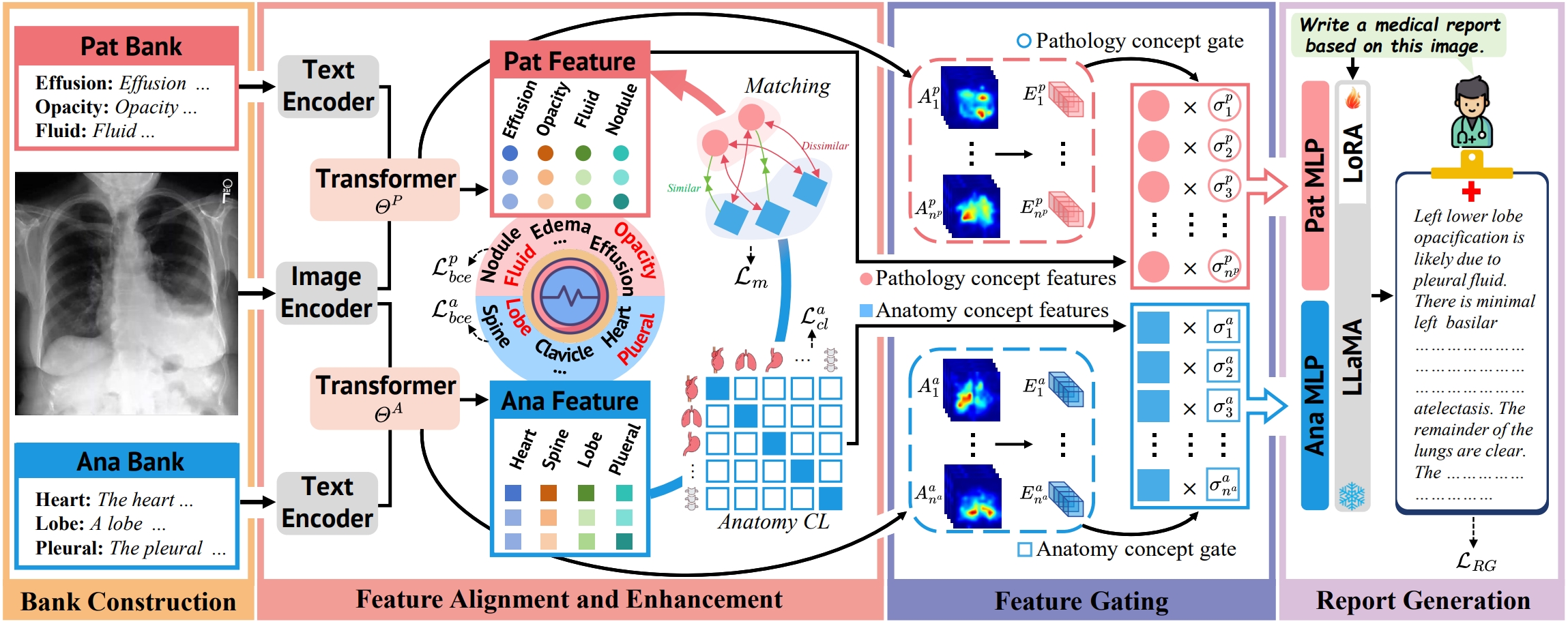

MCA-RG: Enhance LLM with Medical Concept Alignment for Radiology Report Generation

Qilong Xing, Zikai Song, Youjia Zhang, Na Feng, Junqing Yu, Wei Yang †

Medical Image Computing and Computer Assisted Intervention (MICCAI), 2025

- We propose MCA-RG, a knowledge-driven framework for radiology report generation that aligns visual features with curated pathology and anatomy concept banks. By incorporating anatomy-based contrastive learning, pathology-guided matching, and a feature gating mechanism, MCA-RG enhances diagnostic accuracy and clinical relevance. Experiments on MIMIC-CXR and CheXpert Plus demonstrate state-of-the-art performance in medical report generation.

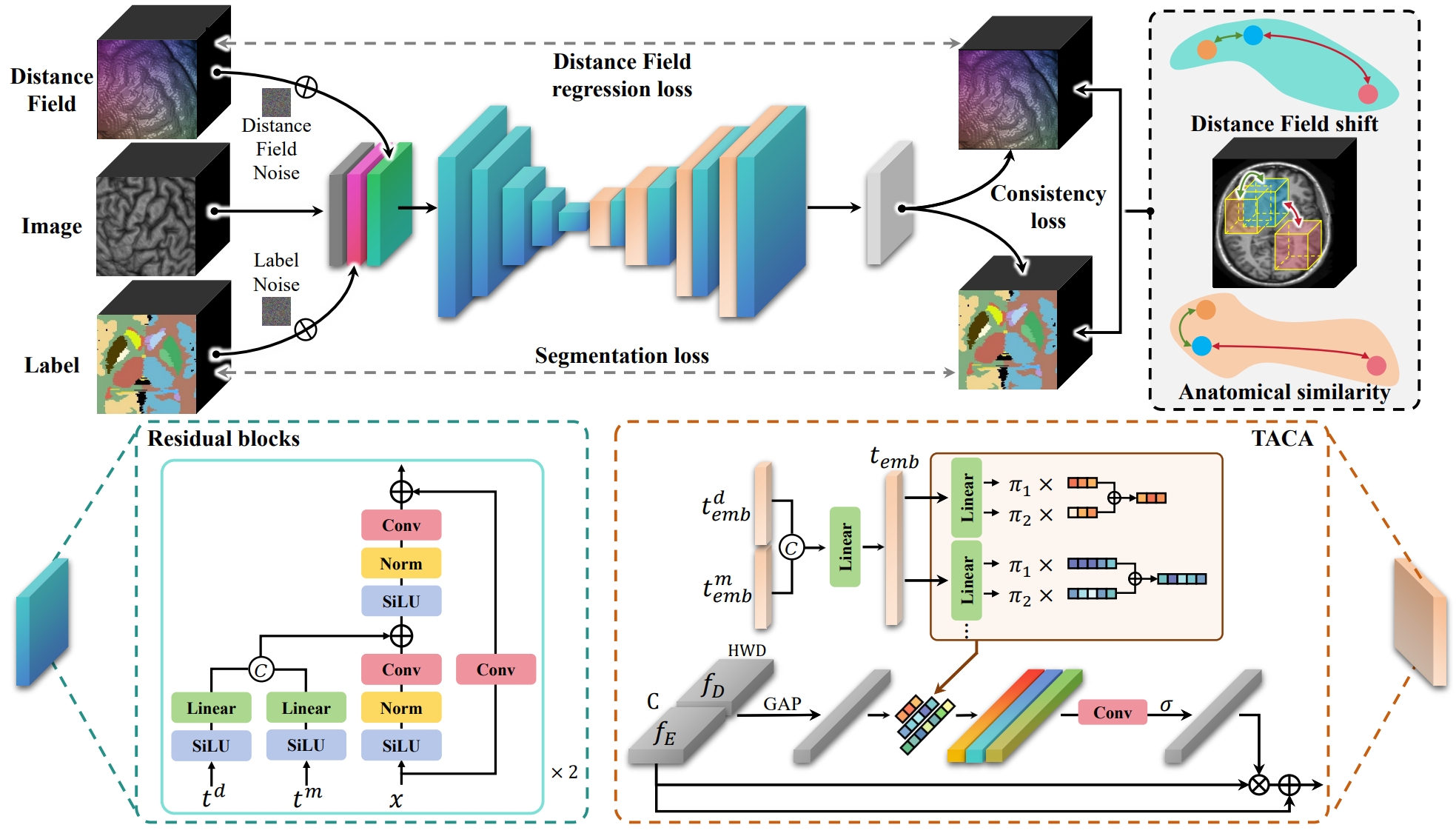

CA-Diff: Collaborative Anatomy Diffusion for Brain Tissue Segmentation

Qilong Xing, Zikai Song †, Yuteng Ye, Yuke Chen, Youjia Zhang, Na Feng, Junqing Yu, Wei Yang †

International Conference on Multimedia & Expo (ICME), 2025

- We propose Collaborative Anatomy Diffusion (CA-Diff), a framework that integrates spatial anatomical features to enhance the segmentation accuracy of the diffusion model.

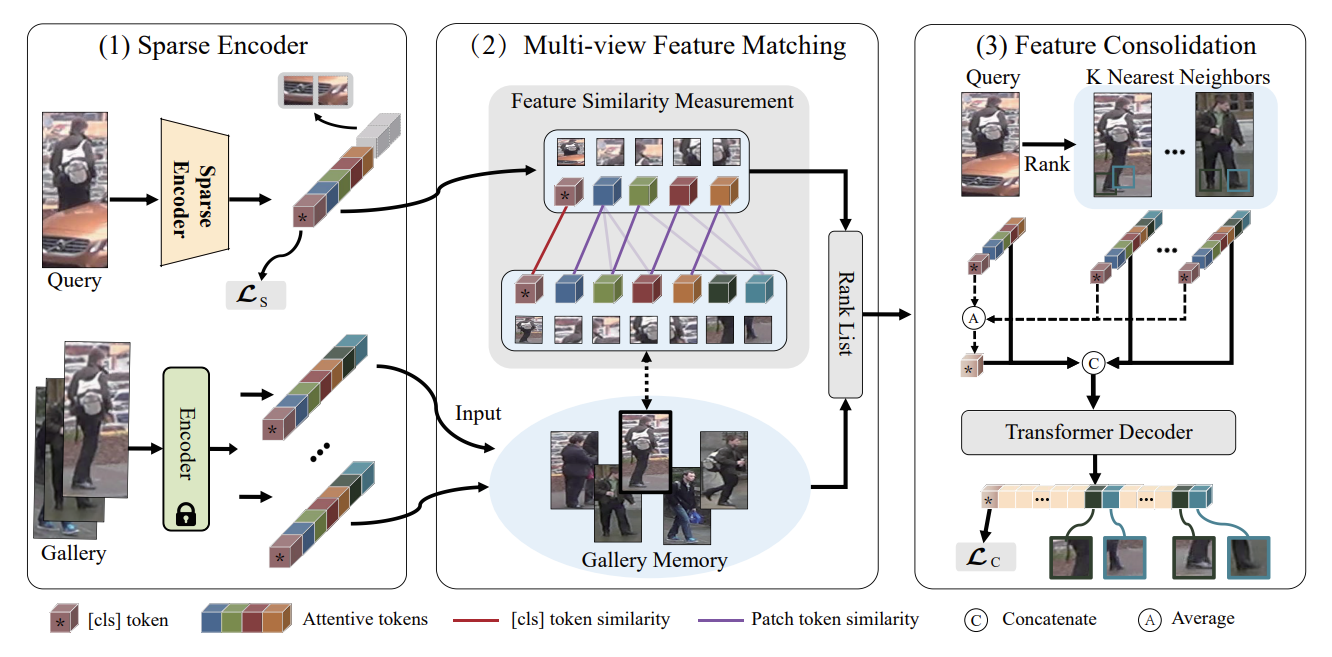

Dynamic feature pruning and consolidation for occluded person re-identification

YuTeng Ye, Jiale Cai, Chenxing Gao, Youjia Zhang, Junle Wang, Qiang Hu, Junqing Yu, Wei Yang †

AAAI Conference on Artificial Intelligence (AAAI), 2024

- We propose a Feature Pruning and Consolidation (FPC) framework that circumvents explicit human structure parsing. It consists of a sparse encoder, a global and local feature ranking module, and a feature consolidation decoder.

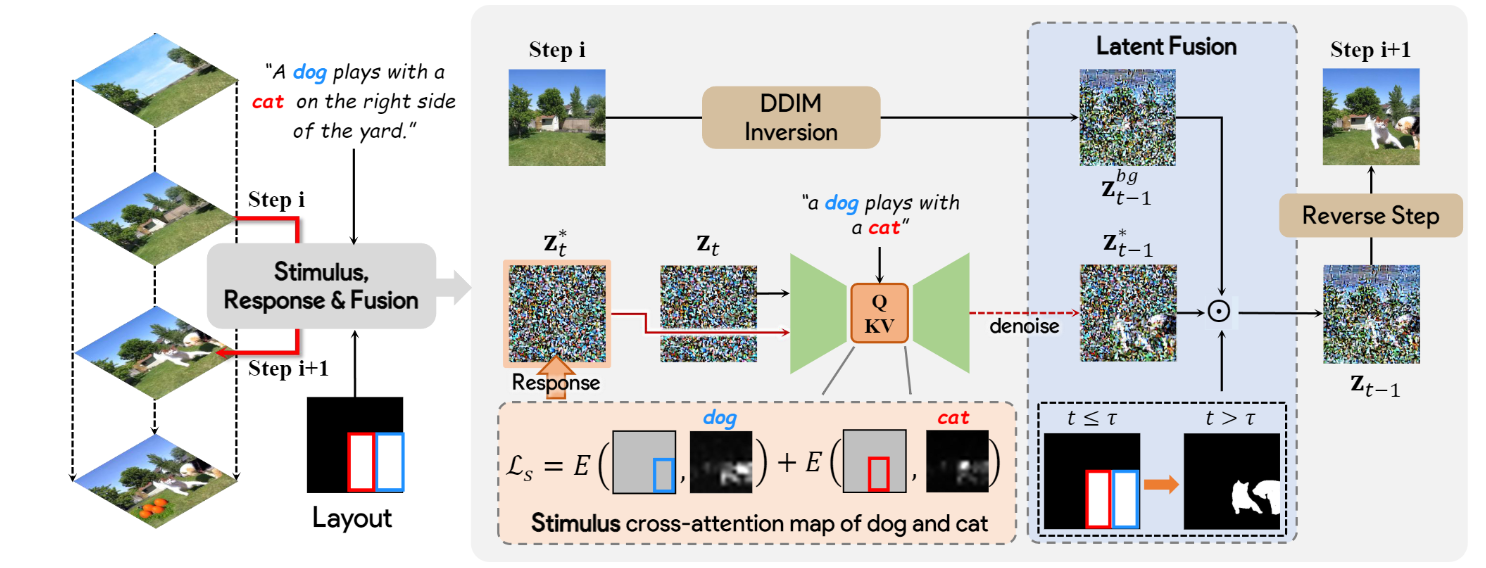

Progressive Text-to-Image Diffusion with Soft Latent Direction

YuTeng Ye, Jiale Cai, Hang Zhou, Guanwen Li, Youjia Zhang, Zikai Song, Chenxing Gao, Junqing Yu, Wei Yang †

AAAI Conference on Artificial Intelligence (AAAI), 2024

- We propose to harness the capabilities of a Large Language Model (LLM) to decompose text descriptions into coherent directives adhering to stringent formats and progressively generate the target image.

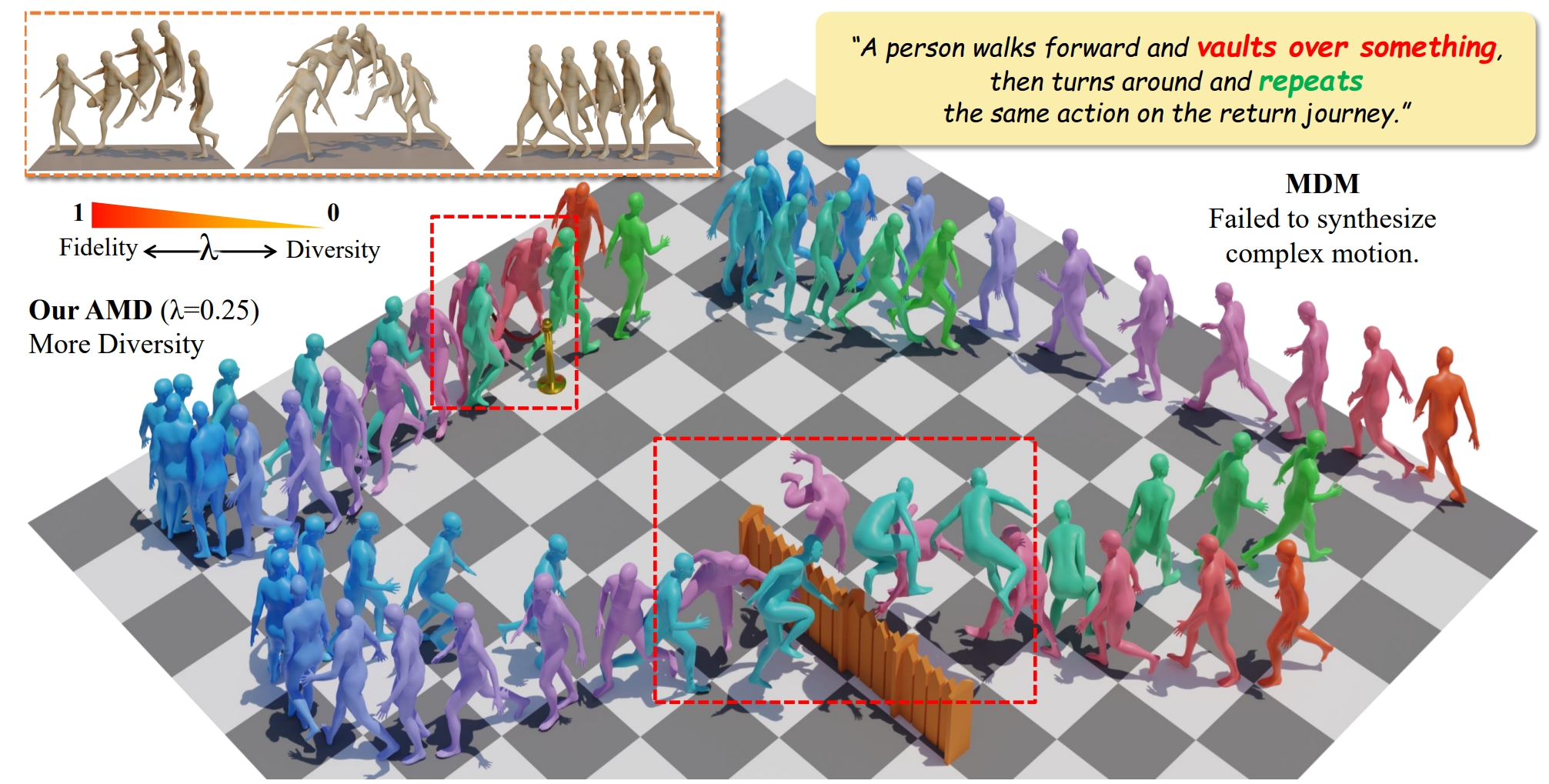

AMD: anatomical motion diffusion with interpretable motion decomposition and fusion

Beibei Jing, Youjia Zhang, Zikai Song, Junqing Yu, Wei Yang †

AAAI Conference on Artificial Intelligence (AAAI), 2024

- We propose the Adaptable Motion Diffusion (AMD) model, which leverages a Large Language Model (LLM) to parse the input text into a sequence of concise and interpretable anatomical scripts that correspond to the target motion.

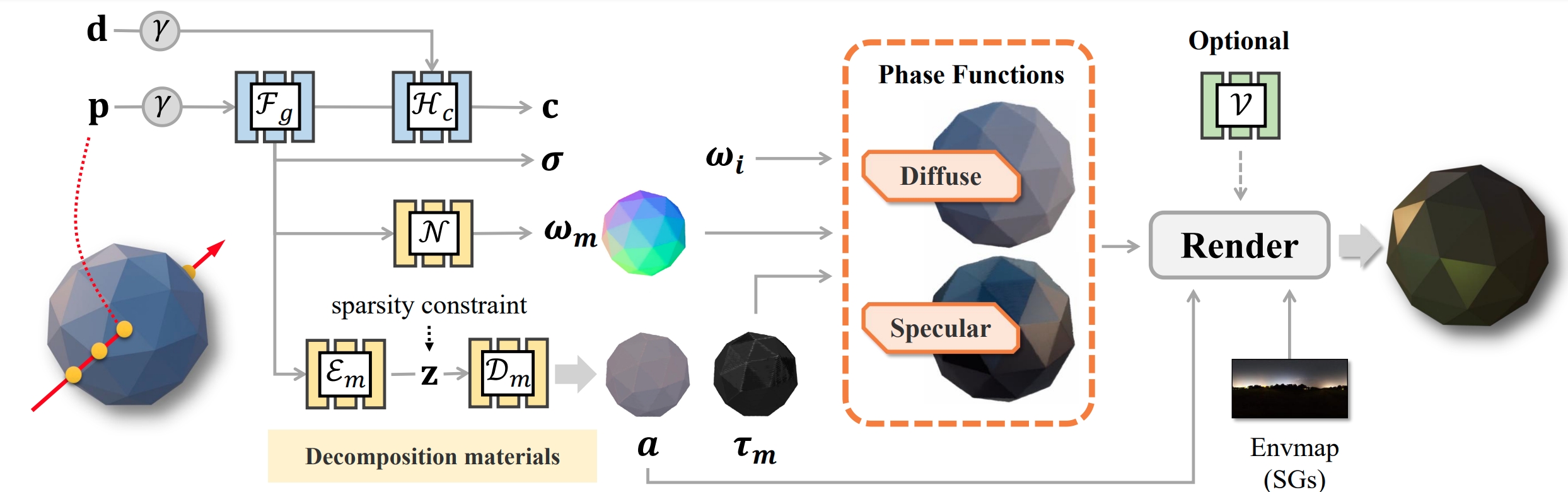

NeMF: Inverse volume rendering with neural microflake field

Youjia Zhang, Teng Xu, Junqing Yu, Yuteng Ye, Yanqing Jing, Junle Wang, Jingyi Yu, Wei Yang †

International Conference on Computer Vision (ICCV), 2023

- We perform inverse volume rendering by representing a scene as a microflake volume, which assumes that space is filled with infinitely small flakes and that light reflects or scatters at each spatial location according to the microflake distributions.

Highly accurate and large-scale collision cross sections prediction with graph neural networks

Renfeng Guo*, Youjia Zhang*, Yuxuan Liao*, Qiong Yang, Ting Xie, Xiaqiong Fan, Zhonglong Lin, Yi Chen, Hongmei Lu †, Zhimin Zhang †

Communications Chemistry (JCR Q1), 2023

- We present SigmaCCS, a graph neural network-based method for CCS prediction from 3D conformers. It achieves high accuracy and chemical interpretability, enabling large-scale in-silico CCS estimation.

Honors and Awards

- 2023: China National Scholarship (Top 1%).

- 2021: Outstanding Graduate of Central South University.

Education

- 2017.09 - 2021.06, Undergraduate, Central South University.

- 2021.09 - 2023.06, Master's, Huazhong University of Science and Technology.

- 2023.09 - Present, Ph.D., Huazhong University of Science and Technology.